Researched and written by Evan – ~4 min read

Accessibility isn’t optional; it’s essential. For people who are deaf or hard of hearing, spoken content can otherwise be lost. That’s where AI interpreting and live captioning step in: real-time text or audio support that lets everyone follow along, no matter their hearing ability.

But how good is “good enough”? Let’s explore how these technologies empower inclusion, where they still fall short, and how a professional service can bridge the gap.

Why live captions & interpreting matter

- Equal access to information: Captions let hearing-impaired participants see spoken words in text form. Interpreting (audio/text) ensures they don’t miss nuance or critical details.

- Better in noisy environments: Large venues, open spaces, or hybrid events often struggle acoustically. Captions help everyone, especially those with hearing challenges, stay on track regardless of background noise.

- Support for non-native speakers: Captions help people whose first language isn’t the spoken one. Seeing text reinforces comprehension.

- Regulatory requirements: Many jurisdictions now require events, public broadcasts, and services to include captioning or language access as part of accessibility laws.

- Enhanced engagement & retention: When people can read along as they listen, their understanding improves and attention is better retained.

How AI interpreting & live captioning work today

Real-time captioning typically relies on automatic speech recognition (ASR) to convert spoken audio into text. Advanced systems may also translate that text into multiple languages. For hybrid or virtual events, the captions are streamed in sync with the audio/video feed. Interpreting with AI adds another layer: spoken source → translation → audio or text output.
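To make that flow concrete, here is a minimal sketch of the "speech → text → translation → timed caption" chain. It is an illustration under stated assumptions, not how any particular product works: `fake_asr` and `fake_translate` are hypothetical stand-ins for a real speech recognizer and translation engine, and the output uses the WebVTT cue layout as one common way to carry timed captions.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Segment:
    """One recognized chunk of speech with its timing, in seconds."""
    start: float
    end: float
    text: str


def format_timestamp(seconds: float) -> str:
    """Render seconds as a WebVTT-style timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def caption_cues(
    segments: Iterable[Segment],
    translate: Callable[[str], str],
) -> Iterator[str]:
    """Turn recognized segments into timed caption cues, translating each one."""
    for seg in segments:
        text = translate(seg.text)
        yield f"{format_timestamp(seg.start)} --> {format_timestamp(seg.end)}\n{text}"


# Hypothetical stand-in for an ASR engine: a real one would stream
# segments from live audio instead of returning a fixed list.
def fake_asr() -> Iterable[Segment]:
    return [
        Segment(0.0, 2.5, "Welcome everyone."),
        Segment(2.5, 5.0, "Let's begin."),
    ]


# Hypothetical stand-in for a translation engine (English to Spanish).
PHRASES = {
    "Welcome everyone.": "Bienvenidos a todos.",
    "Let's begin.": "Empecemos.",
}


def fake_translate(text: str) -> str:
    # Fall back to the source text when no translation is known.
    return PHRASES.get(text, text)


if __name__ == "__main__":
    print("WEBVTT\n")
    for cue in caption_cues(fake_asr(), fake_translate):
        print(cue + "\n")
```

Passing `translate` as a parameter keeps the caption step independent of the language engine, so same-language captions simply use a function that returns the text unchanged.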

The limitations you should know

While AI interpreting and captioning bring great promise, some caveats remain, especially when compared to human interpreting:

- Audio overlap (echo effect)

A major issue with some live translation devices, like the AirPods live translation feature, is that the listener hears both the original voice and the translated voice simultaneously. This can be confusing and disruptive, and it reduces clarity.

- Accuracy & domain vocabulary

AI models sometimes misinterpret jargon, names, acronyms, or accented speech, especially in fast or overlapping speech.

- Latency & synchronization

Even a small delay between speech and captions or translation can break flow. In conversations, this lag makes exchanges feel stilted and out of step.

- Language coverage & dialects

Not all languages or dialects are supported, especially local or region-specific ones.

- Context, emotion & nuance

Humans read tone, add emphasis, insert pauses, and interpret idiomatic or cultural meaning. AI cannot reliably do that yet.

Because of these limitations, many accessibility experts recommend combining AI with human interpreters as a safety net.
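Of the caveats above, latency is the easiest to put a number on. The sketch below is one possible way to check caption lag during a rehearsal, assuming you can log when each phrase was spoken and when its caption appeared on screen. The function names, the sample timestamps, and the 3-second budget are all hypothetical placeholders; pick a threshold that suits your own event.

```python
from statistics import mean
from typing import Dict, List, Sequence


def caption_lags(spoken_at: Sequence[float], shown_at: Sequence[float]) -> List[float]:
    """Per-caption delay in seconds: display time minus speech time."""
    if len(spoken_at) != len(shown_at):
        raise ValueError("each spoken phrase needs exactly one caption timestamp")
    return [shown - spoken for spoken, shown in zip(spoken_at, shown_at)]


def summarize(lags: Sequence[float], budget_s: float) -> Dict[str, float]:
    """Average lag, worst lag, and how many captions exceeded the budget."""
    return {
        "mean": mean(lags),
        "worst": max(lags),
        "over_budget": sum(1 for lag in lags if lag > budget_s),
    }


if __name__ == "__main__":
    # Hypothetical rehearsal log, in seconds from the start of the session.
    spoken = [0.0, 2.5, 5.0]
    shown = [1.5, 4.5, 9.0]
    # The 3.0 s budget is an assumed example value, not a standard.
    print(summarize(caption_lags(spoken, shown), budget_s=3.0))
```

If a rehearsal shows captions drifting past your budget, that is a signal to improve the audio feed or add human support before the live event.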

How Langpros approaches inclusive interpreting + captions

At Langpros, we don’t rely solely on earbuds or generic auto-captioning. Instead, we adopt a hybrid, layered model that balances automation, human oversight, and premium AV technology.

Here’s how we do it:

- AI for lightweight needs

For small team meetings, informal sessions, or low-budget settings, we offer an AI interpreting and captioning option. Users connect from their smartphone or laptop, select a language, and get live AI audio or captions. It’s efficient, cost-effective, and convenient, but best suited to lower-stakes scenarios.

- Human + AI in parallel for major events

For conferences, summits, and formal events, we combine AI tools with professional interpreters. Human interpreters handle the main, high-stakes languages to ensure accuracy, nuance, and cultural context, while AI is used for live captioning and lower-priority languages, helping expand accessibility and significantly reduce costs without compromising quality.

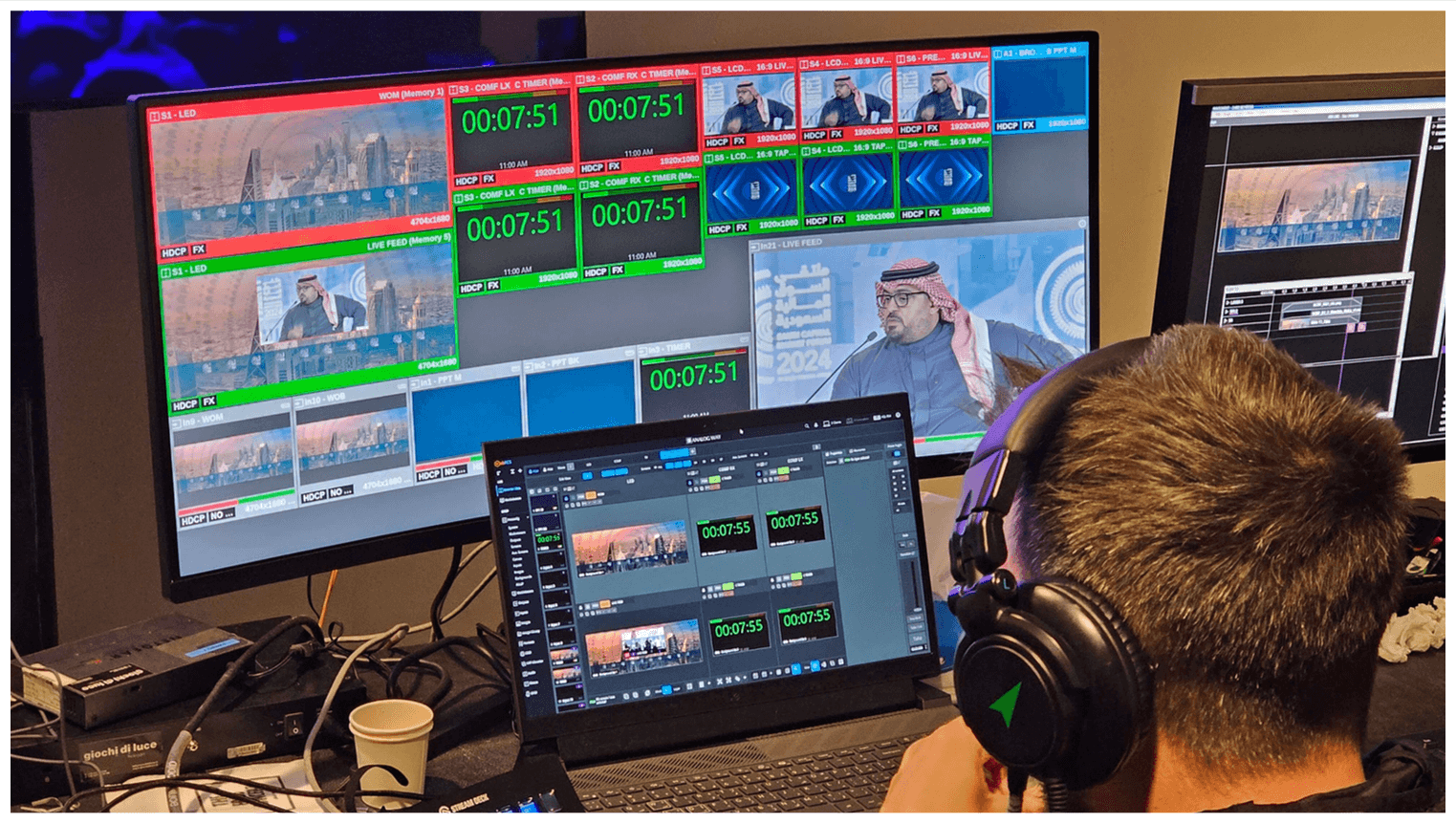

- High-end AV infrastructure

Our AV setups (booths, PTZ cameras, push-to-talk mics, streaming systems) ensure that audio and video are crystal clear, which helps captioning systems perform well and minimizes distortion and noise interference.

- Accessibility advocacy & compliance

We follow best practices: live captions must be visible, positioned correctly, and customizable. Our systems can support multi-language captions and synchronized display.

When and how to use these tools

Here’s a rough guide to when AI interpreting & captioning, alone or in combination, makes sense:

| Scenario | Ideal Approach |
| --- | --- |
| Small internal meeting, minimal budget | AI interpreting + auto captioning may suffice |
| Panel discussion, public event | Human interpreters + AI interpreting/captioning |
| High-stakes negotiations, diplomatic meetings, or multilingual conferences | Human interpreting + AI or professional captioning |

A future worth writing for

AI interpreting and live captions hold tremendous potential to remove barriers, amplify voices, and make events truly inclusive. Researchers are pushing boundaries too: for example, augmented reality caption systems that add emotional cues to text, blending facial expressions or tone into captions themselves.

But until those systems mature, the safest, most effective path remains technology guided by human expertise.

If you’re designing your next inclusive event, where every participant, regardless of hearing ability, deserves to follow, engage, and be seen, Langpros has the tools, experience, and sensitivity to deliver. Let us help you make communication accessible and seamless. Get a free quote HERE.